Is there anything that artificial intelligence (AI) can’t help us do better, faster, and cheaper? Businesses and the fundraising profession have clearly embraced AI, as evidenced by the articles, webinars, and courses springing up to teach us all how to use various AI tools. For example, Coursera has a course on using ChatGPT with Excel to clean up your data, and the Indiana University Lilly Family School of Philanthropy offers a course titled “AI & Fundraising: Revolutionizing Your Fundraising Efforts.”

Many good things are coming out of AI models, but there is a dark side, too. Inevitably, bias creeps into our algorithms and decision-making processes. Bias can lead to unfair outcomes, damage an organization’s reputation, and even have legal consequences.

Bias in AI systems can manifest in various forms, including:

- Data Bias: When the training data used to build AI models is not representative of the real world, it can lead to biased predictions and decisions. For example, if your donor data predominantly represents a certain demographic group, your AI system may be biased towards that group.

- Algorithmic Bias: The algorithms used in AI systems may contain biases, either due to the way they were designed or because they learn biased patterns from the data. This can result in unfair or discriminatory outcomes.

- Confirmation Bias: This occurs when AI systems reinforce existing biases present in the data by making recommendations that align with those biases. For example, a tool might suggest prospective donors who are demographically similar to your existing donor base.

- Feedback Loop Bias: AI systems can create feedback loops where biased recommendations lead to biased actions, further reinforcing the bias in the system.
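To make data bias a little more concrete, here is a minimal Python sketch of one check a prospect research team might run before trusting a model built on its donor file: compare each group's share of the donor records with its share of a reference population (for example, your community or constituent base). The group labels, the reference shares, and the `representation_gaps` helper are all hypothetical, made up for illustration.

```python
from collections import Counter


def representation_gaps(donor_groups, reference_shares):
    """Return observed share minus reference share for each group.

    donor_groups: one group label per donor record (hypothetical field).
    reference_shares: assumed share of each group in the population the
    model is meant to serve; expected to sum to 1.
    """
    counts = Counter(donor_groups)
    total = len(donor_groups)
    if total == 0:
        raise ValueError("no donor records to check")
    return {
        group: counts.get(group, 0) / total - expected
        for group, expected in reference_shares.items()
    }


# Hypothetical donor file: 80 records from group A, 15 from B, 5 from C.
donors = ["A"] * 80 + ["B"] * 15 + ["C"] * 5
# Hypothetical reference population shares.
community = {"A": 0.50, "B": 0.30, "C": 0.20}

for group, gap in representation_gaps(donors, community).items():
    # Positive gap: over-represented in the data; negative: under-represented.
    print(f"{group}: {gap:+.2f}")
```

With these made-up numbers, group A shows a gap of +0.30 and groups B and C each show -0.15, which is exactly the kind of skew that can lead a model trained on this file to favor prospects who look like group A.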

A 2019 article in Harvard Business Review points out that biased programs have been around for some time, citing an example from 1988.

“Back in 1988, the UK Commission for Racial Equality found a British medical school guilty of discrimination. The computer program it was using to determine which applicants would be invited for interviews was determined to be biased against women and those with non-European names. However, the program had been developed to match human admissions decisions, doing so with 90 to 95 percent accuracy.”

(Harvard Business Review, 2019)

Finding Room To Trust AI

If refusing to use AI-powered tools and products won’t stop the world from moving forward with AI, how can fundraising and prospect research professionals evaluate whether the AI they use or develop is trustworthy?

The first step is to learn more about the steps that are being, or could be, taken to identify, mitigate, or remove bias from AI.

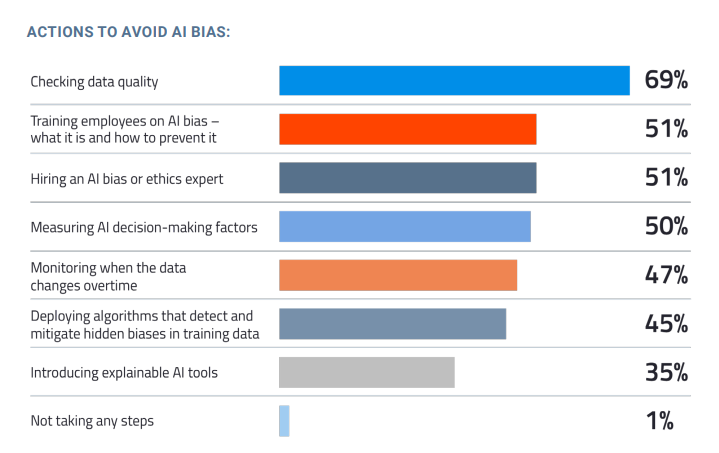

DataRobot, a company that offers an AI platform for businesses, published its second “State of AI Bias Report” in January 2022. The report found that 7 in 10 respondents were confident in their company’s ability to identify AI bias. Confidence is not the same as ability, but it does suggest that companies are making deliberate attempts to avoid bias.

(Source: State of AI Bias Report, 2022)

In an interview with Forbes, Ted Kwartler, VP of Trusted AI at DataRobot, said that “good AI” is a multidimensional effort across four distinct personas:

- AI Innovators: Leaders or executives who understand the business and realize that machine learning can help solve problems for their organization

- AI Creators: The machine learning engineers and data scientists who build the models

- AI Implementers: Team members who fit AI into existing tech stacks and put it into production

- AI Consumers: The people who use and monitor AI, including legal and compliance teams who handle risk management

(Source: Forbes, 2022)

As end users of AI, fundraising and prospect research professionals can take several practical steps to promote ethical and equitable AI:

- Take the time to read and learn about how bias enters AI systems.

- Ask your vendors how they guard against bias in their products and services.

- Be skeptical of the scores and recommendations from AI tools.

- Champion or volunteer on a task force of diverse practitioners to review new tools.
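Being skeptical of scores can start with something as simple as checking whom a tool flags. The minimal Python sketch below assumes you can export each prospect's result (flagged as a top prospect or not) alongside a group label. It computes the share of each group that was flagged and divides it by the highest group's share. The data, the helper names `selection_rates` and `impact_ratios`, and the 0.8 review threshold (borrowed from the "four-fifths" rule of thumb in US employment-selection guidelines) are illustrative assumptions, not an established standard for fundraising.

```python
def selection_rates(records):
    """Share of records in each group that the tool flagged.

    records: iterable of (group, flagged) pairs, where flagged is a bool.
    """
    totals, flagged = {}, {}
    for group, is_flagged in records:
        totals[group] = totals.get(group, 0) + 1
        flagged[group] = flagged.get(group, 0) + int(is_flagged)
    return {group: flagged[group] / totals[group] for group in totals}


def impact_ratios(rates):
    """Each group's selection rate divided by the highest group's rate."""
    highest = max(rates.values())
    if highest == 0:
        # Nobody was flagged, so there is no disparity to measure.
        return {group: 1.0 for group in rates}
    return {group: rate / highest for group, rate in rates.items()}


# Hypothetical scored prospect list: 100 prospects in each group.
scored = ([("A", True)] * 40 + [("A", False)] * 60
          + [("B", True)] * 10 + [("B", False)] * 90)

ratios = impact_ratios(selection_rates(scored))
for group, ratio in ratios.items():
    # 0.8 mirrors the US "four-fifths" rule of thumb; using it as a
    # review trigger for prospect scores is an assumption.
    status = "review" if ratio < 0.8 else "ok"
    print(f"{group}: ratio {ratio:.2f} ({status})")
```

Here group B is flagged at a quarter of group A's rate. A low ratio does not by itself prove the model is biased, since groups can differ for legitimate reasons, but it tells you which questions to bring to your vendor or task force.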

While AI has the potential to revolutionize fundraising, it also comes with the responsibility to ensure that it does not perpetuate biases or discriminatory practices. Avoiding bias in AI requires a multifaceted approach that includes diverse and representative data, transparent algorithms, ethical considerations, and ongoing vigilance. By understanding how bias in AI works and what can be done to avoid it, we can help our organizations navigate this new terrain while upholding our commitment to fairness and inclusivity.

P.S. I used ChatGPT to help me write this blog post. If you’re curious about this, create a free account on the Prospect Research Institute forums, log in, and join the conversation in the thread “ChatGPT et al – any testers out there?”

Additional Resources

- What Do We Do About the Biases in AI? | by James Manyika, Jake Silberg, and Brittany Presten | Harvard Business Review | 2019

- The Problem With Biased AIs (and How To Make AI Better) | by Bernard Marr | Forbes | 2022

- State of AI Bias Report | DataRobot | 2022

- Responsible AI: How DonorSearch Is Shaping Nonprofit Tech | DonorSearch | 2023

- The Fundraising.AI Collaborative | An “independent collaborative that exists to understand and promote the development and use of Responsible Artificial Intelligence for Nonprofit Fundraising”

- ChatGPT for Beginners: Save time with Microsoft Excel | Coursera | undated

- AI & Fundraising: Revolutionizing Your Fundraising Efforts | IU Lilly Family School of Philanthropy | 2023